One of our jobs at the C4DT Factory is to work on promising projects from our affiliated labs. This helps the faculties translate their research into formats accessible to different audiences. For a newly on-boarded project, we evaluate its current state and identify the required steps towards a final product. We may then also participate in the project’s further development, either by directly contributing to the source code or by taking on an advisory role.

This shows the lab how to improve their workflow and how to close the gap with a real-world use case.

At the C4DT Factory, the work on these projects allows us to contribute to the latest EPFL research and communicate it to the outside world. We build our expertise and then share it with our partners through practical workshops and collaborative projects. It’s not just theoretical: one of our demonstrators was the catalyst that brought people together and jump-started the creation of a startup. This approach helps bridge the gap between academic research and real-world applications, and it creates value for our partners and the broader tech community.

A project may have an associated demonstrator for everybody to discover the technology behind it. We host these demonstrators and support them, in case libraries change or need updates. This also means that at some point we have to sunset them, lest we be choked by the need to support old code.

This summer, two of our demonstrators and one market artifact reached end-of-life. We archived them on GitHub, but not without taking some screenshots first!

AT2 – Asset Management Transfer

Bitcoin was the first completely decentralized system that allowed digital assets to be sent securely without a central entity like a bank. Over time these assets became very valuable, but one of the main problems with Bitcoin and other blockchains remained: to secure the network, they rely on proof of work. This type of algorithm spends an enormous amount of energy to make sure that nobody can cheat the system and spend the same money twice.

To solve this problem, a research project by Prof. Rachid Guerraoui from the Distributed Computing Lab looked at it in a new way and discovered something very important: proof of work is not needed to secure asset transfers, because a much simpler algorithm can do the same. With the results of this research project, assets can be transferred over a network containing rogue participants. The system is orders of magnitude faster than Bitcoin and consumes a fraction of the energy, while keeping similar security promises.

AT2 achieves such speed by avoiding the synchronization of a common state, the global consensus. Instead, it relies on a local consensus only. The local state isn’t the same at every node at every moment, but it does converge to a common state. This way, transactions can be processed as soon as they happen, and the nodes don’t need to wait for the whole network to agree on the correct ordering.
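To make the idea of converging without a global ordering more concrete, here is a minimal toy sketch in Python. It is not AT2's actual protocol or code: the `Transfer` and `Node` classes, the per-sender sequence numbers, and the pending buffer are assumptions chosen for illustration. It only shows that two nodes receiving the same transfers in different orders can end up with the same balances. It does not handle a sender that issues conflicting transfers under the same sequence number; a real system needs a broadcast layer to prevent that.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Transfer:
    sender: str
    seq: int        # hypothetical per-sender sequence number, starting at 1
    receiver: str
    amount: int


class Node:
    """Toy node: orders transfers per sender only, never globally."""

    def __init__(self, initial_balances):
        self.balances = dict(initial_balances)
        self.next_seq = defaultdict(lambda: 1)  # next expected seq per sender
        self.pending = []                        # transfers not yet applicable

    def deliver(self, transfer):
        self.pending.append(transfer)
        self._apply_ready()

    def _apply_ready(self):
        # Keep applying buffered transfers until none of them is applicable.
        progress = True
        while progress:
            progress = False
            for t in list(self.pending):
                in_order = t.seq == self.next_seq[t.sender]
                funded = self.balances.get(t.sender, 0) >= t.amount
                if in_order and funded:
                    self.balances[t.sender] -= t.amount
                    self.balances[t.receiver] = self.balances.get(t.receiver, 0) + t.amount
                    self.next_seq[t.sender] += 1
                    self.pending.remove(t)
                    progress = True


def replay(order):
    """Deliver the transfers in the given order to a fresh node."""
    node = Node({"alice": 10, "bob": 5, "carol": 0})
    for t in order:
        node.deliver(t)
    return node.balances


t1 = Transfer("alice", 1, "bob", 6)
t2 = Transfer("bob", 1, "carol", 8)    # only funded once t1 has arrived
t3 = Transfer("alice", 2, "carol", 4)

# Two nodes see the same transfers in different orders.
print(replay([t1, t2, t3]))
print(replay([t3, t2, t1]))
```

Both calls print the same balances, even though the second node has to buffer `t3` and `t2` until `t1` arrives. The nodes never agree on a single global order of all transfers; each one only respects the order chosen by every individual sender.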

|

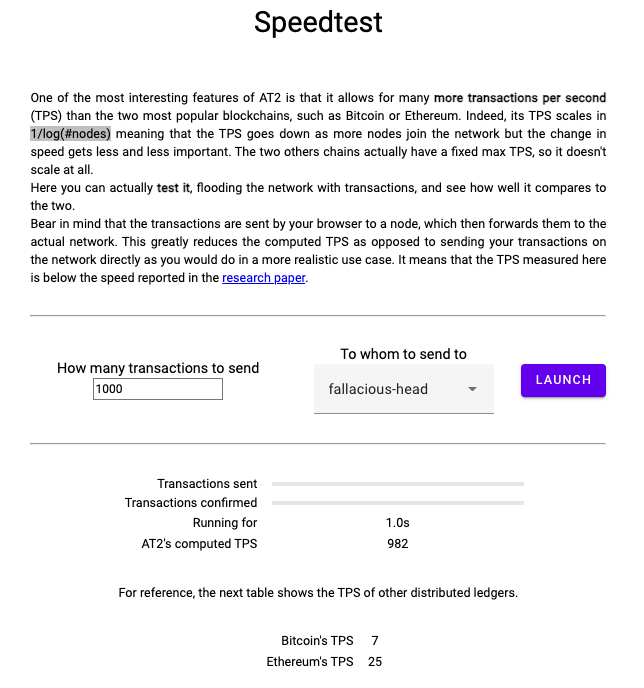

| The speedtest part of the demonstrator where you can measure throughput using AT2’s new consensus algorithm. |

In this demonstrator, you can create an account, which is credited with some initial assets. Then you can use it to send money to another account on the network, as you would do on other distributed ledgers. To show that AT2 is indeed blazingly fast, the demonstrator contains a speed test. With it you can send as many transactions as your balance allows. Then it measures how well the network handles the load. If you want to set up the system yourself, you can find the code here: AT2-web

You can find more information in our showcase: AT2

Garfield – Byzantine Machine Learning

Machine Learning (ML) is all over the place these days, mostly as Artificial Intelligence (AI) in the form of Large Language Models (LLMs). While these models are astoundingly powerful for everyday tasks, other models are better suited for specific tasks related to specific data. When machine learning is applied to private or sensitive data, several problems pop up. One of the first is that the holders of the data often do not want to send their data to a centralized server for training.

Decentralized machine learning offers a solution to this problem. It allows several nodes to each train a model using their local data. Only at the end are these models combined into a common model. However, this poses new problems: how much of the local data is present in the common model? And, more importantly: how can we avoid bad actors in the system who may wish to corrupt our common model?

To answer these questions, Garfield was developed at Prof. Rachid Guerraoui’s Distributed Computing Lab, at EPFL. Garfield focuses on Distributed ML, which is a way to use multiple machines that work together on training a model with data. When working with several machines concurrently, the risk of failures increases. This can negatively impact the computed results if the system is not ready to handle them. Using Garfield, developers can easily build applications that are tolerant to such failures and still give meaningful results.
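As a rough illustration of why failure tolerance matters when combining updates from several machines, the following Python sketch compares a plain average with a coordinate-wise median. This is a generic robust-aggregation technique and not Garfield's API; the function names and the numbers are made up for the example.

```python
from statistics import mean, median


def aggregate_mean(updates):
    """Average each coordinate over all workers (not robust)."""
    return [mean(coord) for coord in zip(*updates)]


def aggregate_median(updates):
    """Take the median of each coordinate over all workers."""
    return [median(coord) for coord in zip(*updates)]


# Hypothetical model updates from four honest workers...
honest = [[1.0, -2.0], [1.2, -1.8], [0.9, -2.1], [1.1, -1.9]]
# ...and one faulty or malicious worker sending garbage.
faulty = [[1000.0, 1000.0]]
updates = honest + faulty

print("mean:  ", aggregate_mean(updates))
print("median:", aggregate_median(updates))
```

With a single bad update, the average is pulled far away from the honest values, while the median stays within their range. The median only helps as long as the faulty workers are a minority.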

|

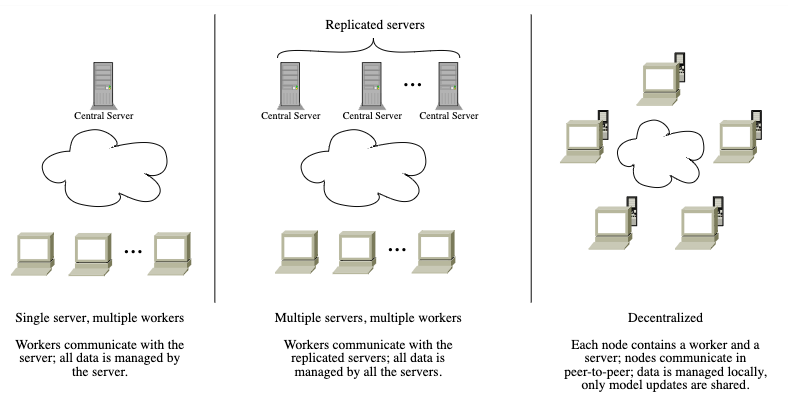

| Three different systems supported by Garfield – the Decentralized model is the most privacy-preserving one. |

This demonstrator focuses on the decentralized architecture (the third diagram above). It allows you to examine how learning evolves when more machines are added, what impact failures have, and how Garfield helps to manage them. If you want to try out the demonstrator locally on your machine, you can follow the instructions here: README-demo

A similar architecture is available from Prof. Martin Jaggi’s Machine Learning and Optimization Laboratory. It is called Disco, and the C4DT Factory team also participated in the development of this framework.

You can find more information in our showcase: Garfield

LightArti – Using Tor in Mobile Apps

There is no screenshot for this market artifact, as it’s a library for mobile devices. When developing the SwissCovid application, one idea for the future was to send statistics to a server. However, privacy restrictions made this complicated to do. So we wanted to use the Tor network, which allows us to hide the sender of a packet. However, all Tor libraries and applications for mobile devices were either difficult for the end user to install or very bandwidth hungry.

This is why we built LightArti, which reduces the amount of bandwidth necessary to communicate with the Tor network. Instead of consuming around 100 MB per week, our solution only uses around 1 MB per week. It offers the programmer a simple API for GET and POST requests to a server. This makes it possible to send statistics to a server anonymously, or to fetch generic configuration from it.

It is based upon the excellent work on arti, a re-write of the Tor client in Rust to improve safety for its users. During the 2 years of the market project, we updated the software and made sure it runs on the latest Tor versions. As nobody used LightArti in a production environment, we decided to stop supporting it, according to our policies on the C4DT Factory Market.

You can find more information in our showcase: LightArti

Ongoing Projects

The Factory never sleeps – well, kind of 🙂 We’re currently evaluating new projects that we could offer as public web services. Martin Jaggi’s lab continues to develop the above-mentioned Disco – more precisely, a former C4DT RSE continues their excellent work on this project.

One of the bigger projects we are working on is the EPFL D-voting system, which is now live and being used in various EPFL-related elections and polls. It’s currently customized for EPFL, but if there is interest, we can work on a generic version.

We’re also preparing hands-on workshops for our partners. Two are coming up: one on E-ID and privacy, and another on deepfakes. Contact us for more info!